Data-driven decision making has become a crucial aspect of running a successful business in the digital age. In order to stay competitive, it is essential that you implement effective data observability practices.

The concept of data observability has garnered increasing attention in the data industry as data-driven approaches to business have become more prevalent, and the importance of data observability has only grown.

In this article, we will explore the basics of data observability, different data observability models, and the benefits of implementing data observability in your organization.

What is data observability?

First, let’s define the concept of data observability.

In simple words, data observability is the ability of an organization to track and control its data landscape and multilayer data dependencies (pipelines, infrastructure, applications) at all times. The key aims of data observability are detecting, preventing, controlling, and remediating data outages or any other issues disrupting the work of the data system or spoiling data quality and reliability.

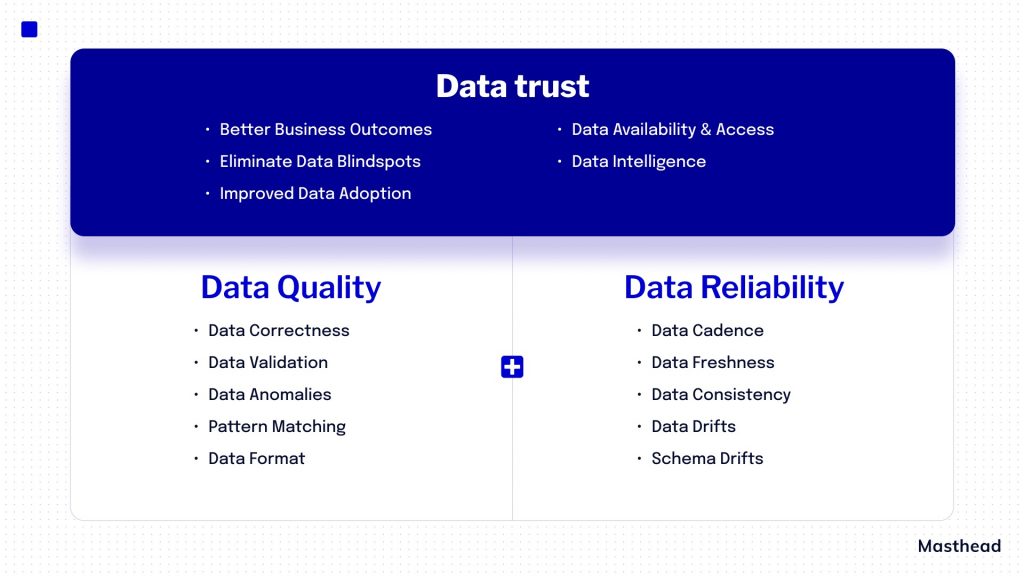

Data quality and data reliability are the fundamental pillars of data trust.

Why is data trust so important? To answer this question, let’s take a look at the benefits companies receive from well-tuned data observability practices.

What benefits does data observability bring you?

Without further ado, let’s outline the main advantages of data observability.

1. Data-driven decision-making done the right way

Ensuring the quality and reliability of your data is crucial for making informed and successful business decisions. Poor data quality can result in costly mistakes, but with data observability, you can quickly identify and resolve any issues, such as anomalies, inconsistencies, or drifts that may arise.

By accurately tracking and analyzing your data, you can make better decisions that boost profits, strengthen your competitive advantage, and improve customer satisfaction. Investing in data observability is a smart move that pays off in the long run.

2. Increased efficiency

Proactive data management is key to maintaining the integrity and accuracy of your data. If you wait too long to address data issues, they can quickly escalate and become more difficult to resolve. Data problems can spread throughout your infrastructure, so it’s essential to address them as soon as they are detected.

Real-time data observability is a valuable tool for ensuring timely response to data anomalies. With the right observability solution, you can receive notifications of any issues as they arise, allowing your data team to investigate and fix the problem promptly. By responding quickly to data anomalies, you can minimize the impact on your business and keep your data infrastructure running smoothly.

3. More control over data with data lineage

Data observability is a powerful tool for managing and optimizing your complex data infrastructure. By providing visibility into the various components and data flows within your system, you can gain a better understanding of how your data is being used and where potential issues may arise. This enables you to track the journey of specific data sets from their injection into the system to their final destination, helping you to effectively manage your data infrastructure.

Additionally, data observability allows you to pinpoint the root cause of any data problems, down to the individual field level. This granular level of detail is crucial for identifying and resolving issues quickly and efficiently. Overall, data observability is an invaluable resource for ensuring the smooth operation of your data infrastructure.

How does data observability work?

There are three basic approaches to achieving data observability, whether through custom tools or off-the-shelf solutions. Let’s take a quick look at each of them.

1. Observing data. This approach involves monitoring data for various metrics, anomalies, or corresponding metadata. While this approach can be effective for detecting potential issues, it does have its limitations. One potential drawback is that it may require scanning to say, simply – run all necessary queries against your data. That could potentially violate security or confidentiality protocols in certain industries. This highlights the importance of finding a data observability solution that adheres to any relevant regulatory requirements and protects the confidentiality of your data.

2. Observing data pipelines. Solutions that observe pipelines help you identify and troubleshoot issues within data pipelines by analyzing metrics related to data transformation and detecting suspicious or irregular events. These solutions are focused specifically on the processes occurring within the pipelines and can provide valuable insights into the interactions between data pipelines and apps/code. By utilizing data observability for data pipelines, you can optimize the performance of your data pipelines and ensure the smooth flow of data throughout your organization.

The downside of this approach is that it is focused on the process of data delivery rather than on data stored in tables or end reports in dashboards or reports.

3. Observing data infrastructure. This data observability approach involves tracking logs and metrics related to infrastructure resource consumption in order to detect data anomalies without directly accessing the data. This approach allows you to identify a range of data anomalies, including freshness, volume, schema changes, deleted tables, and other data errors that may impact data quality. While this can be a useful method for identifying issues, it does have some limitations, including the inability to drill down to column-level values within tables. Despite this, this approach can still provide comprehensive insights into the overall health and quality of your data.

Should you build or buy a data observability tool?

When deciding between a custom data observability tool and a pre-packaged solution, it’s important to consider the following questions:

1. Are you confident that you can build something better than an existing product driven by a solid tech team, and experience from many years in the market?

The best data observability solutions are developed by experts with a strong understanding of the data issues covered by their product.

2. Are you ready to wait X time and invest strategically to build a custom solution?

While the initial cost of implementing a custom data observability tool may not seem significant, it’s important to factor in additional expenses that may arise. These can include unexpected tasks and issues, such as bug fixes, which can increase labor costs. It’s also important to consider the opportunity cost of investing time and resources into the development of a custom tool, as this could potentially divert attention and resources away from other important business needs. Ultimately, it’s important to carefully weigh the potential benefits and costs of a custom data observability solution before making a decision.

3. Did you consider handling maintenance and support of your custom observability solution over time?

It’s not uncommon for engineers to recommend a custom solution due to its perceived simplicity of implementation. However, it’s important to remember that custom solutions require ongoing maintenance, updates, and optimization to ensure they are running smoothly and effectively. On the other hand, pre-packaged, out-of-the-box options offer a ready-to-use solution that has been tested in the market and is supported by a team of experts. While a custom solution may seem appealing at first, it’s important to carefully weigh the long-term maintenance and support requirements against the benefits of a pre-packaged option.

After carefully considering all of the relevant factors, a pre-packaged, out-of-the-box data observability tool may emerge as the most viable option for your organization.

Stay on top of your data landscape with Masthead

If you’re looking for a reliable data observability solution that can help you keep your data secure and your cloud billing costs under control, Masthead is worth considering. This powerful no-code solution offers a range of valuable features, including anomaly detection, column-level lineage, and real-time issue alerts. With Masthead, you can proactively identify and fix data errors before they become a problem for your data users.

If you have any questions about Masthead or would like to learn more, don’t hesitate to get in touch with us.