When we think about software observability, the first companies that come to mind are Datadog, New Relic, and Splunk. The value they deliver to organizations is ensuring that the software and services are up and running as expected, While doing so, none of these third-party applications request access to the customer’s codebase; everything is done by monitoring log files only.

In other words, software observability is achieved exclusively by monitoring logs. Moreover, monitoring logs and not accessing a company’s core intellectual property (software code) or customer data is the only way to deliver comprehensive observability.

Data Observability is greater than Data Monitoring

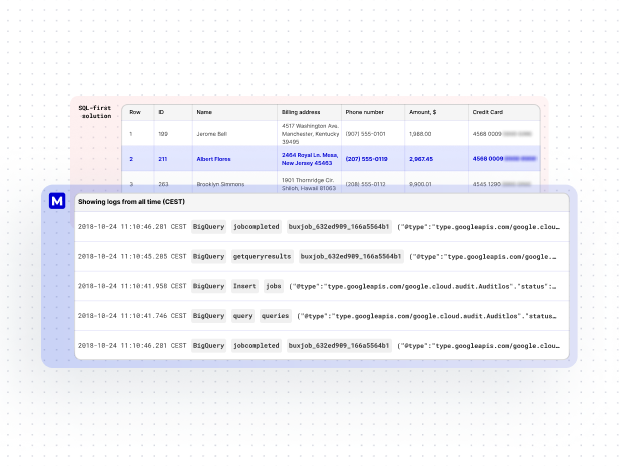

In my work, I speak with various companies that have been fortunate to adopt data observability and achieve results with it. However, to my surprise, when I talk to companies that have adopted a third-party SQL-first data observability solution, I often find that these solutions are allocated to specific data sets, which do not necessarily contain sensitive data like credit card numbers, customer emails, or names. The visibility of SQL-first data observability solutions tends to be limited to data sets with aggregated data, such as marketing or product data. When I ask customers of these SQL-first solutions about their awareness of what is happening upstream and downstream in other data sets that have, or potentially have, sensitive data, they usually respond affirmatively. They confirm that their security team did not allow the vendor access to read this sensitive data.

I have a lot of mixed feelings at this point. However, we must first embrace the idea that if you’ve implemented a SQL-first solution on a dataset or somehow otherwise limited access to your data, it’s not truly data observability – it’s a monitoring system. It lacks the necessary level of coverage to be considered observability; at best, it’s observability for a specific dataset. To put it simply, it’s not a comprehensive system but rather a monitoring system. This essentially means you’ve adopted a data quality monitoring solution, which could be perfectly adequate for the needs you have with data at that point.

Data products are outputs of IT and Data infrastructure that are designed to create business outcomes, while Data Mesh guides and supports data architecture that meets business requirements and enables businesses to extract value from data faster.

The Need for Comprehensive Data Platform Monitoring

But this raises a question: Do you really know what is happening in your data platform if you’re only monitoring the outcomes of some pipelines/models in certain tables/dashboards? The analogy here is ensuring that the water quality at your kitchen sink is within acceptable limits without ever checking the pipes in case they are leaking somewhere in the basement or the water quality in your guest bathroom, which you seldom use.

I am not saying that SQL-first solutions don’t work. These solutions do an excellent job of ensuring data quality, which obviously cannot be disregarded. Meanwhile, a data platform, as a complex system with multiple layers of user access, can have problems beyond the monitored dataset.

At one crucial moment, something went wrong with a Python pipeline that ingests data from an internal app. Although there were monitoring systems in place for it, the problem was that these systems were built by a team member who left the company a year ago. The person responsible for maintaining this pipeline did not know who actually used it, so when they updated it, the pipeline started duplicating the data. That leads to problems that no one ever of for a long time until data users discover that data was not correct for an unknown period of time and reduce the use of the data source from a vendor. (This is a real-life example.) The bottom line is that monitoring specific tables is monitoring for known unowns, but monitoring the whole system at scale is actually facing the unknown unknowns.

Advantages of Leveraging Logs and Metadata for Enhanced Data Platform Reliability

Here’s the thing: data platforms are evolving at the speed that business requires, and keeping up with all the monitoring and coverage of it can be challenging and resource-consuming. Data quality is important, but it does not provide the coverage that a data platform requires as a system to be effectively monitored. It necessitates real-time log monitoring, similar to software systems.

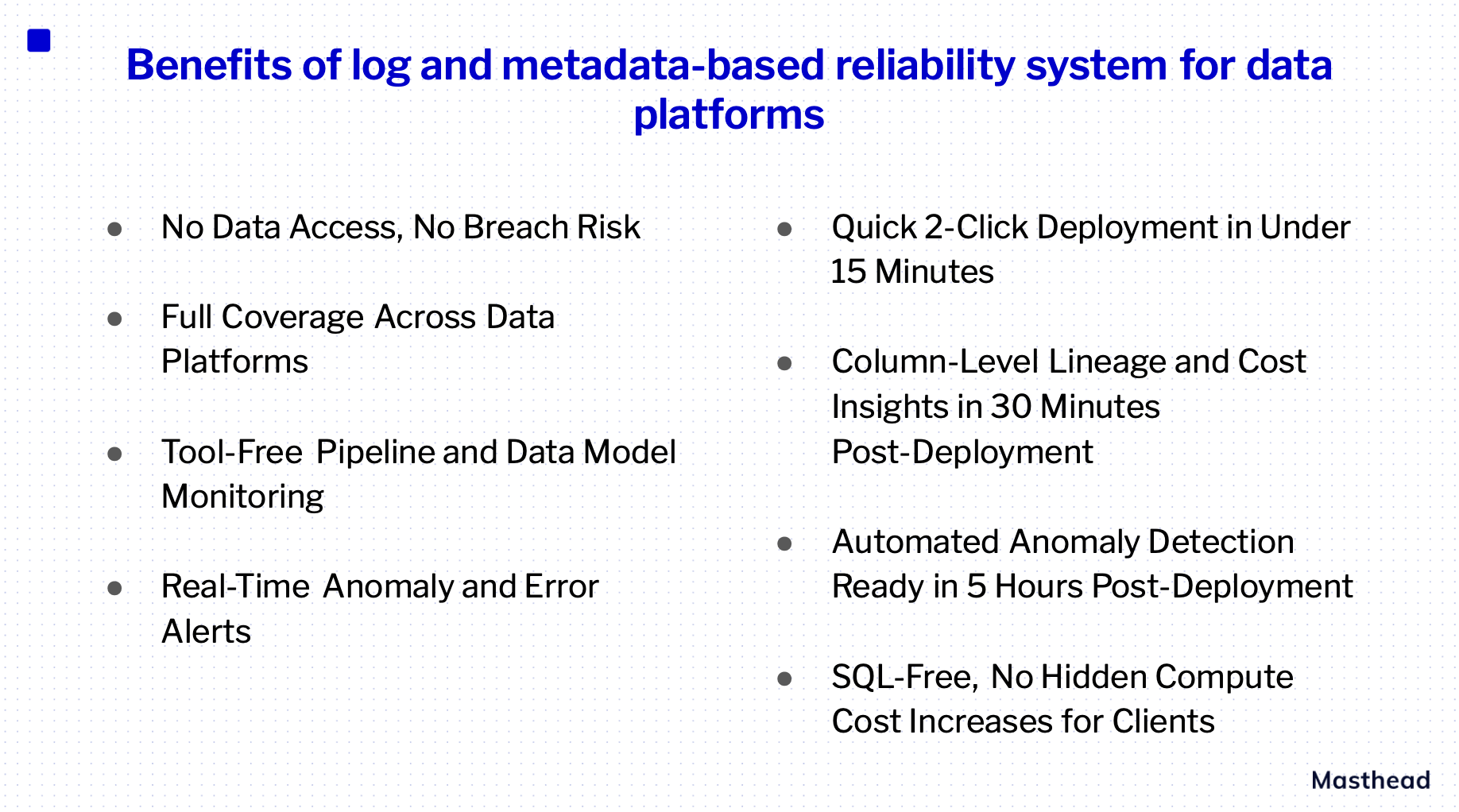

A log and metadata-based reliability system for data platforms offers several benefits, including:

- No read or edit access to client data, architecturally posing no hazard to client data and no risks of data breaches.

- Capturing anomalies and errors in tables and pipelines across the entire data platform.

- Monitoring all pipelines and models running on the client’s data platform without needing to integrate with the solutions powering data ingestion or transformation. It doesn’t matter what system is used (Python scripts, dbt, Dataform, Dataflow, Fivetran, etc.).

- Alerting about anomalies or pipeline errors in real-time.

- Deployment within 15 minutes and full visibility of the process, including lineage, within 30 minutes after deployment.

- Anomaly detection for all time-series tables within the warehouse, available within 5 hours after the deployment of the application.

- No increase in client cloud costs, as monitoring logs and metadata is free of charge, unlike running SQL on a scheduled basis.

Conclusion: Advancing Data Platform Reliability

Ensuring the reliability of a data platform is not limited to ensuring the quality of a certain metric within a table or the freshness of certain tables. Data platforms, which more and more companies are investing in to harvest the benefits of data, require a far more complex level of assurance than running SQL rules against tables. Unlike software, data engineering is in its early stages of development and is still establishing its best practices, frameworks, and standards. This also affects how tools integrate. Ensuring that everything is working as expected in a data platform is crucial yet challenging. Acquiring a solution that can automatically identify the health performance of tables and pipelines across an entire data platform while not accessing actual data and not increasing your compute cost, is a way to shortcut many debugging hours and invest in strategic improvements of the data platform.