Here is our first article from the BigQuery Cost Optimisation series.

As a powerful and scalable data warehousing solution, BigQuery offers tremendous value for businesses of all sizes. However, storage costs can quickly accumulate if not managed effectively. In this article, we’ll dive deep into BigQuery’s storage model and provide you with actionable strategies to reduce your storage expenses.

Google BigQuery costs consist of two components: storage and compute.

Google BigQuery pricing is tailored to its product design and architecture, which is why I encourage users to understand how it is built and how it works.

Google BigQuery is a cloud-native data warehouse. Compared to traditional node-based cloud data warehouses or on-premise massively parallel processing (MPP) systems, BigQuery has a serverless architecture, which decouples storage and compute so both can scale independently and on demand.

“This structure offers immense flexibility and cost control, as customers don’t need to keep expensive compute resources up and running all the time.” (Google documentation)

At Masthead, we’ve observed that storage typically accounts for 10-15% of the BigQuery bill for most customers, though this percentage can vary by company. The majority of the cost comes from compute. In today’s data warehouse landscape, storage is often seen as a commodity, while compute is where these platforms add value and justify premium pricing.

Reducing storage costs is more straightforward than optimizing GBQ compute costs: it can be done occasionally rather than continuously, and it still shows visible results on the GBQ bill.

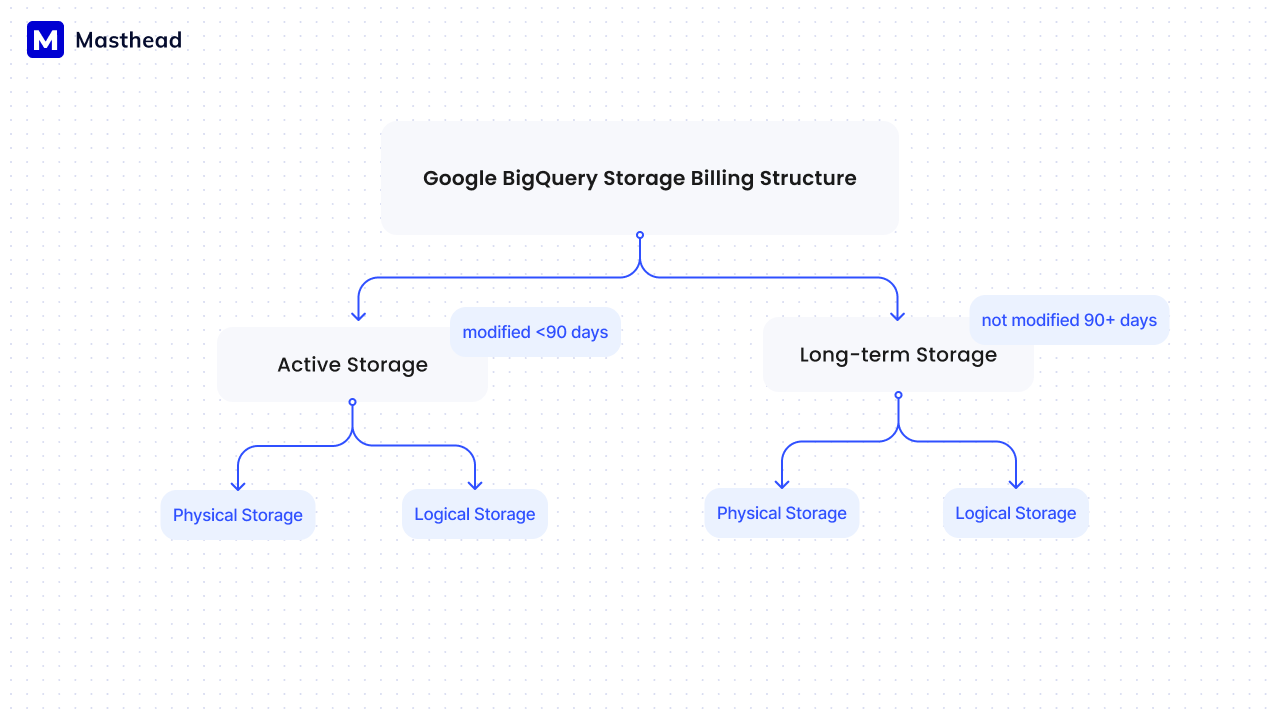

Understanding the billing structure for BigQuery storage

The storage in Google BigQuery is billed per GiB (gibibyte), not GB (gigabyte).

1 GiB ≈ 1.074 GB. It is important to remember this to avoid confusion in further calculations because data storage in Google Cloud Storage is billed in GB (gigabyte).
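To make the unit difference concrete, here is a minimal sketch of the conversion. The $0.020 per GB figure is an illustrative list price for Standard Cloud Storage, not a quote; check the current price list for your region.

```python
GIB_IN_BYTES = 2**30   # gibibyte (binary unit, used for BigQuery storage)
GB_IN_BYTES = 10**9    # gigabyte (decimal unit)


def price_per_gib(price_per_gb: float) -> float:
    """Convert a price quoted per GB into the equivalent price per GiB."""
    return price_per_gb * GIB_IN_BYTES / GB_IN_BYTES


# Assumption: $0.020 per GB-month, used only as an example input.
standard_gcs_per_gib = price_per_gib(0.020)
print(f"${standard_gcs_per_gib:.4f} per GiB-month")
```

With that input, the result is about $0.0215 per GiB, which is why per-GB and per-GiB prices should not be compared directly.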

Courtesy of Masthead Data

Active storage includes any table or table partition that has been modified in the last 90 days.

It’s important to understand that “modified” covers any change to the table: running DML against it, inserting or merging more data into it, adding clustering on a column, and so on.

“I have noticed many times folk aren’t aware they changed data and freak out seeing a 12 TiB (tebibyte) table was changed from long-term to active due to a DML change.” Sayle Matthews

Long-term storage includes any table or partition that has not been modified for 90 consecutive days. The price of storage for that table automatically drops after 90 days without modification; querying the table does not reset the timer. There is no difference in performance, durability, or availability between active and long-term storage.
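The 90-day rule can be sketched as a small classifier. This is only an illustration of the rule described above, not how BigQuery implements it; the partition names and timestamps are made up.

```python
from datetime import datetime, timedelta, timezone

# Per the rule above: 90 consecutive days without modification.
LONG_TERM_AFTER = timedelta(days=90)


def storage_tier(last_modified: datetime, now: datetime) -> str:
    """Return 'long_term' if unmodified for 90+ days, otherwise 'active'."""
    return "long_term" if now - last_modified >= LONG_TERM_AFTER else "active"


# Hypothetical partitions and their last-modified times.
now = datetime(2024, 6, 1, tzinfo=timezone.utc)
partitions = {
    "events$20240101": datetime(2024, 1, 1, tzinfo=timezone.utc),
    "events$20240520": datetime(2024, 5, 20, tzinfo=timezone.utc),
}
tiers = {name: storage_tier(ts, now) for name, ts in partitions.items()}
print(tiers)
```

Because the rule applies per partition, a single DML statement that touches an old partition moves only that partition back to active pricing.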

Active storage costs about twice as much as long-term storage; in other words, long-term storage is roughly 50% cheaper. Interestingly, long-term logical storage in the US Central region is roughly half the price of Standard Cloud Storage (BigQuery long-term logical storage at $0.01 per GiB versus Standard Cloud Storage at $0.02148 per GiB). In the same location, long-term physical BigQuery storage costs roughly the same as Standard Cloud Storage ($0.02 per GiB versus $0.02148 per GiB).

Courtesy of Masthead Data

Compare the cost of storing 1 GiB across the different storage types in BigQuery and Cloud Storage.

So whenever someone advises you to move data to Google Cloud Storage, think about which storage class it will be and whether it makes sense to get your hands dirty. Standard Cloud Storage is not cheaper than long-term BigQuery storage. Nearline, Coldline, and Archive may provide better long-term pricing if you access the data rarely, if ever, and for such data Archive and Coldline are usually the cheapest options.

You also need to know the difference between Logical and Physical storage to optimize the GBQ storage cost.

Data is not stored in an abstract “cloud” but on physical servers.

Physical storage is the amount of data stored on disk after compression. In other words, you are billed for the physical space the compressed bytes take up on a server.

Logical storage, on the other hand, is the uncompressed size of your data in Google BigQuery. Under logical billing, the storage retained for time travel and fail-safe for the same data is included in the price.

Time travel allows you to access data as it was at any point within the time travel window. The typical use case is when something was updated or deleted and you need to check the state of the data before the event. Time travel is enabled by default, with the window set to the maximum of 7 days.

Fail-safe allows users to restore data an additional 7 days after the time-travel window by opening up a ticket with Google Support.
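The two retention windows stack one after the other. A minimal sketch of the timeline, assuming a configurable time travel window of 2 to 7 days and a fixed 7-day fail-safe period; the function name and dates are illustrative.

```python
from datetime import datetime, timedelta, timezone

FAIL_SAFE_DAYS = 7  # fixed window that follows time travel


def recovery_windows(changed_at: datetime, time_travel_days: int = 7):
    """Return (time_travel_end, fail_safe_end) for data changed at `changed_at`."""
    if not 2 <= time_travel_days <= 7:
        raise ValueError("time travel window must be between 2 and 7 days")
    time_travel_end = changed_at + timedelta(days=time_travel_days)
    fail_safe_end = time_travel_end + timedelta(days=FAIL_SAFE_DAYS)
    return time_travel_end, fail_safe_end


# Hypothetical deletion on 1 June with the default 7-day window.
deleted_at = datetime(2024, 6, 1, tzinfo=timezone.utc)
tt_end, fs_end = recovery_windows(deleted_at)
print(tt_end.date(), fs_end.date())
```

With the defaults, changed or deleted data stays recoverable for 14 days in total: self-service for the first 7, then through a support ticket for the next 7.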

What is important to know:

- Generally, physical storage billing is cheaper than logical storage billing in GBQ, except where the data compresses poorly or where the time travel and fail-safe costs outweigh the savings from compression. This normally happens in datasets where the data changes a lot. ELT transformations run with Dataform and dbt seem to be among the biggest culprits.

- Logical storage pricing automatically includes time travel and fail-safe storage for the same data. In contrast, physical storage pricing does not include either: time travel and fail-safe bytes are charged separately.

- Logical storage is enabled for all datasets in GBQ by default.

- Users can change the storage billing model at the dataset level, unlike the compute billing model, which is set at the project level. After a change, you have to wait 14 days before switching the same dataset between the two models again.

- If users set their dataset storage model to physical, time travel and fail-safe storage remain automatically enabled, and their compressed (physical) bytes are billed separately as additional bytes.

Storage for both time travel and fail-safe is billed at the active physical storage price. Sayle shared that this can add up quickly: if someone deletes a 10+ TiB table that was in long-term storage, its data is immediately billed as active storage for the 14 days of time travel and fail-safe retention. This happened to a customer and really racked up their costs one month.
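A rough back-of-the-envelope sketch of that scenario follows. It assumes the 10 TiB is the compressed (physical) size, an active physical price of $0.04 per GiB per month (an assumption: twice the $0.02 long-term physical price quoted earlier), and a 30-day billing month. Real numbers depend on region and current pricing.

```python
# Assumption: active physical price per GiB-month; verify against the price list.
ACTIVE_PHYSICAL_PER_GIB_MONTH = 0.04


def retention_cost(physical_gib: float, retention_days: int,
                   price_per_gib_month: float = ACTIVE_PHYSICAL_PER_GIB_MONTH,
                   days_in_month: int = 30) -> float:
    """Cost of keeping deleted bytes through time travel plus fail-safe."""
    return physical_gib * price_per_gib_month * retention_days / days_in_month


# 10 TiB deleted, retained for 7 days of time travel + 7 days of fail-safe.
deleted_gib = 10 * 1024
cost = retention_cost(deleted_gib, retention_days=14)
print(f"${cost:,.2f}")
```

Under these assumptions, the deleted table costs about $191 over the two weeks, at twice the daily rate it had in long-term storage, and the charge repeats every time a similar table is dropped or replaced.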

Ways to optimize Google BigQuery storage cost

- One of the most effective ways is to change the default Logical storage to Physical storage where possible.

In many cases, this reduces the GBQ storage bill by 20% to 70%, though the result depends on the type of data contained in the tables.

The only thing to remember is that time travel and fail-safe remain enabled by default under physical storage billing, so the time travel window should be configured if needed.

This is important because the time travel window can be reduced to a minimum of 2 days, while the fail-safe window cannot be configured.

Download the BigQuery Cost Optimization Guide to get SQL for managing your default configuration.

Link to the detailed GCP documentation guide to changing the storage type: https://cloud.google.com/bigquery/docs/default-configuration
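As a hedged illustration, the small helper below assembles the `ALTER SCHEMA ... SET OPTIONS` statement that switches a dataset to physical billing and sets its time travel window. The helper name and the dataset `my_project.analytics` are hypothetical; `storage_billing_model` and `max_time_travel_hours` are the dataset options as I understand them from the BigQuery DDL reference, so verify them against the documentation linked above before running anything.

```python
def dataset_storage_ddl(dataset: str, billing_model: str = "PHYSICAL",
                        time_travel_hours: int = 168) -> str:
    """Build a DDL statement that sets billing model and time travel window."""
    if billing_model not in ("LOGICAL", "PHYSICAL"):
        raise ValueError("billing_model must be 'LOGICAL' or 'PHYSICAL'")
    # The window is expressed in hours: 48 (2 days) to 168 (7 days), whole days only.
    if time_travel_hours % 24 != 0 or not 48 <= time_travel_hours <= 168:
        raise ValueError("time_travel_hours must be a multiple of 24 in [48, 168]")
    return (
        f"ALTER SCHEMA `{dataset}`\n"
        "SET OPTIONS (\n"
        f"  storage_billing_model = '{billing_model}',\n"
        f"  max_time_travel_hours = {time_travel_hours}\n"
        ");"
    )


# Hypothetical dataset, switched to physical billing with a 2-day window.
print(dataset_storage_ddl("my_project.analytics", "PHYSICAL", 48))
```

The generated statement can be pasted into the BigQuery console or run through whichever client you already use.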

Physical storage is normally cheaper for most tables, but it also depends on the data type and how well it compresses. Logical billing works better for staging data, for tables that are frequently created and replaced, and for cases where compression does not work well enough for the particular type of data.

Before changing billing models, we suggest:

1. Assess potential cost savings

2. Assess whether the time travel option is used and, if so, why

3. Assess the risks of turning down time travel and fail-safe if your goal is to achieve higher savings
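The first step, assessing potential savings, can be sketched as a simple comparison of the two billing models. The per-GiB prices are assumptions based on the figures used in this article (long-term at $0.01 logical and $0.02 physical, active at twice that), and the byte counts are invented. In practice you would take them from your own storage metadata.

```python
from dataclasses import dataclass

# Assumed per-GiB monthly prices; verify for your region.
LOGICAL_ACTIVE, LOGICAL_LONG_TERM = 0.02, 0.01
PHYSICAL_ACTIVE, PHYSICAL_LONG_TERM = 0.04, 0.02


@dataclass
class DatasetBytes:
    active_logical_gib: float
    long_term_logical_gib: float
    active_physical_gib: float       # excluding time travel and fail-safe
    long_term_physical_gib: float
    time_travel_physical_gib: float
    fail_safe_physical_gib: float


def monthly_logical_cost(d: DatasetBytes) -> float:
    """Logical billing: time travel and fail-safe are included in the price."""
    return (d.active_logical_gib * LOGICAL_ACTIVE
            + d.long_term_logical_gib * LOGICAL_LONG_TERM)


def monthly_physical_cost(d: DatasetBytes) -> float:
    """Physical billing: time travel and fail-safe are billed at the active rate."""
    active = (d.active_physical_gib + d.time_travel_physical_gib
              + d.fail_safe_physical_gib)
    return active * PHYSICAL_ACTIVE + d.long_term_physical_gib * PHYSICAL_LONG_TERM


# Hypothetical datasets: one compresses well, one is rewritten constantly.
well_compressed = DatasetBytes(1000, 4000, 200, 800, 20, 20)
high_churn = DatasetBytes(1000, 0, 300, 0, 400, 400)

for name, d in [("well_compressed", well_compressed), ("high_churn", high_churn)]:
    print(name, round(monthly_logical_cost(d), 2), round(monthly_physical_cost(d), 2))
```

In this made-up example the well-compressed dataset is cheaper on physical billing, while the high-churn dataset is cheaper on logical billing because its time travel and fail-safe bytes outweigh the compression savings.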

Sticking to logical storage pricing might seem like the safe option, even if it means paying extra, but there is a workaround. If you want to use physical storage pricing with time travel turned down but still want to be able to restore data, you can re-ingest it and pay for that only when needed. If you can afford to do that in batch, you can use the pool of free slots, subject to availability, and do it almost for free, depending on the volume of data you need to re-ingest. A grain of salt: this is only available for Google Cloud projects using Editions for their compute.

But how do you check the billing model for all datasets in your project? At the moment, there is no easy way to do this in the console, and not even a direct way to see the pricing model of a particular dataset. The process involves going into the Table Details, scrolling down to the Storage Info section, and seeing what the billing structure looks like.

- Set an expiration date for data you will not be using in the future. In one case we observed, a Python application that transformed a client’s data was creating temporary tables without setting an expiration time. As a result, over 10,000 relatively small tables accumulated in the client’s BigQuery project with no future use. Deleting these tables alone saved the client 5% on storage costs overnight. They then fixed the root cause by updating the Python application to set an expiration date on all temporary tables used in data modeling.

Pro tip: Similar to the dataset and table levels, you can also set up expiration at the partition level. Check out our public documentation for default behaviours.
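The three expiration levels can be illustrated with the DDL each one uses. This is a sketch that only assembles the statements: the helper names and the table and dataset names are hypothetical, while `expiration_timestamp`, `partition_expiration_days`, and `default_table_expiration_days` are the BigQuery options as I understand them, so double-check them against the DDL reference.

```python
def _check_days(days: int) -> None:
    if days <= 0:
        raise ValueError("days must be positive")


def table_expiration_ddl(table: str, days: int) -> str:
    """Expire one table `days` from now."""
    _check_days(days)
    return (f"ALTER TABLE `{table}`\n"
            "SET OPTIONS (expiration_timestamp = "
            f"TIMESTAMP_ADD(CURRENT_TIMESTAMP(), INTERVAL {days} DAY));")


def partition_expiration_ddl(table: str, days: int) -> str:
    """Expire each partition `days` after the date it covers."""
    _check_days(days)
    return (f"ALTER TABLE `{table}`\n"
            f"SET OPTIONS (partition_expiration_days = {days});")


def dataset_default_expiration_ddl(dataset: str, days: int) -> str:
    """Give newly created tables in the dataset a default lifetime."""
    _check_days(days)
    return (f"ALTER SCHEMA `{dataset}`\n"
            f"SET OPTIONS (default_table_expiration_days = {days});")


# Hypothetical names for a staging area that holds temporary modeling tables.
print(table_expiration_ddl("my_project.staging.tmp_model_run", 3))
print(partition_expiration_ddl("my_project.analytics.events", 90))
print(dataset_default_expiration_ddl("my_project.staging", 7))
```

A dataset-level default is often the simplest safety net for temporary tables, since it applies to new tables even when the application that creates them forgets to set an expiration.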

To wrap up: by understanding BigQuery’s storage model and implementing the strategies outlined in this guide, you can effectively manage and reduce your storage costs. Remember to regularly monitor your storage usage and adjust your strategies as needed to ensure ongoing cost optimization.

Download our complete guide for additional instructions and more detailed information on optimizing your BigQuery storage costs!